🧠 Unlocking the Mind of AI: System 1 and System 2 Thinking in Large Language Models

AI Engineer

🔍 Summary: This article explores how large language models (LLMs) like ChatGPT mirror human cognitive processes using System 1 (fast, intuitive thinking) and System 2 (slow, analytical reasoning), a distinction popularized by psychologist Daniel Kahneman. LLMs typically excel at System 1 tasks such as quick responses and text generation, while System 2 functions, like step-by-step reasoning and complex problem-solving, are supported through techniques like Chain-of-Thought prompting, System 2 Attention, and knowledge graphs. Combining LLMs with structured AI systems like knowledge graphs enhances reasoning, accuracy, and explainability. The synergy of both systems enables AI to tackle sophisticated tasks, from education to diagnostics. However, challenges remain, including high computational costs, ethical concerns, and bias. The article calls for the responsible development of hybrid AI that balances intuition and logic: thinking like humans, but with distinct limitations.


Ever typed your password so fast your fingers moved on their own? That’s System 1—quick, automatic, effortless. Now, think about creating a secure password. You pause, weigh options, and plan carefully. That’s System 2—deliberate and analytical. Surprisingly, today’s large language models (LLMs) and other AI systems are mimicking these human mental processes, blending rapid responses with deep reasoning. In this post, we’ll explore how LLMs and knowledge graph-based AI align with System 1 and System 2 thinking, their synergy, and AI’s future. Whether you’re an AI enthusiast or just curious, let’s unpack how these systems are becoming eerily human-like.

✍🏻 What Are System 1 and System 2 Thinking?

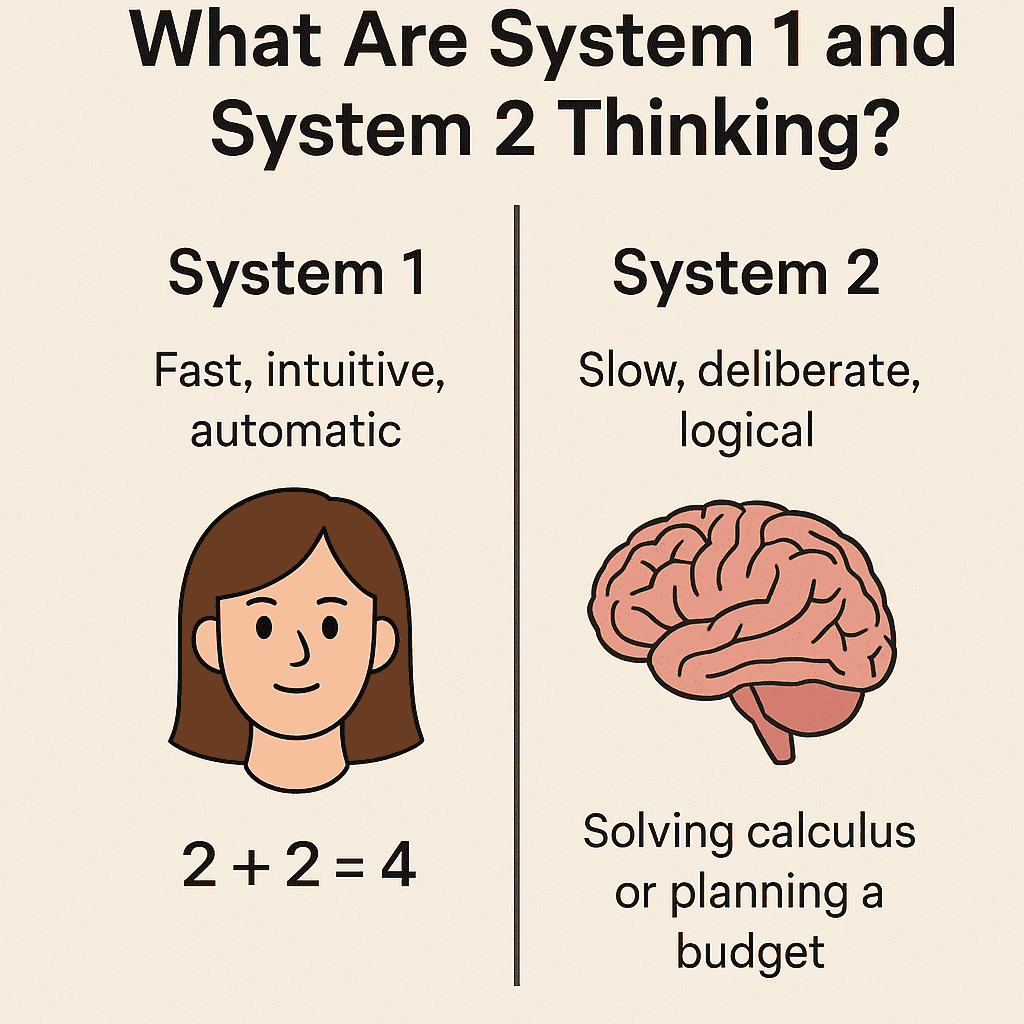

Psychologist Daniel Kahneman’s book Thinking, Fast and Slow popularized the distinction between System 1 and System 2 thinking:

- System 1: Fast, intuitive, automatic. It’s recognizing a friend’s face or answering “2 + 2” instantly.

- System 2: Slow, deliberate, logical. It’s solving calculus or planning a budget.

These systems balance speed and precision in human cognition. As Daniel Kahneman noted on the Lex Fridman Podcast, System 1 drives instinctive decisions, while System 2 handles complex reasoning. Researchers now see parallels in AI, with LLMs excelling at System 1 and other systems like knowledge graphs enabling System 2.

👀 System 1 and System 2 in AI

LLMs like ChatGPT, Gemini, or Claude, and knowledge graph-based AI systems, process information differently. LLMs lean on System 1-like pattern recognition, while knowledge graphs align with System 2’s structured reasoning. Their combination could mirror human cognition’s dual systems.

👾 System 1 in LLMs: Fast and Intuitive AI

Ask an LLM, “What’s the capital of France?” and it instantly replies, “Paris.” That’s System 1—relying on patterns from vast training data for rapid answers. A 2025 survey notes LLMs shine in:

- Text completion: Filling in sentences based on context.

- Language translation: Converting phrases using associations.

- Simple Q&A: Delivering facts from their knowledge base.

Imagine chatting about a sci-fi novel. An LLM might suggest Dune if you love Foundation. This speed is efficient but can lead to “hallucinations” when faced with novel queries, much like human System 1 can misjudge based on ingrained patterns (e.g., political loyalty overriding logic).

🧠 System 2 in AI: Deliberate Reasoning

For complex tasks like solving “What is 15% of 240?” or analyzing data, System 2-like reasoning is needed. LLMs and knowledge graph-based AI tackle these differently but complementarily.

🤖 LLMs with System 2 Techniques

Advanced LLMs like OpenAI’s o1/o3 or DeepSeek’s R1 use techniques to mimic System 2. A 2024 study shows these boost performance in models with over 62 billion parameters. Key methods include:

- Chain-of-Thought (CoT) Prompting: The model outlines steps, e.g., solving 15% of 240:

  - Convert 15% to 0.15.

  - Multiply 0.15 by 240 to get 36.

- Branch-Solve-Merge (BSM): Splits tasks into sub-tasks (e.g., assessing a paper’s clarity, novelty), evaluates each, and merges results.

- Agent Architectures (Talker-Reasoner): A fast “talker” handles quick responses; a slower “reasoner” tackles complex tasks.

- System 2 Attention (S2A) Prompting: Filters irrelevant context to focus on key facts, boosting factual accuracy from ~63% to 80% (PromptHub, 2024).

- Monte Carlo Tree Search (MCTS): Explores reasoning paths for optimal solutions.

- Reinforcement Learning (RL): Fine-tunes logical reasoning.
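To make Chain-of-Thought prompting more concrete, here is a minimal Python sketch. It only builds the prompt and parses a reply; the helper names (`build_cot_prompt`, `extract_answer`), the "Answer:" convention, and the canned `sample_response` are assumptions made for illustration, not part of any model's API.

```python
import re
from typing import Optional

# Instruction appended to the question to elicit visible reasoning steps.
# The "Answer:" line is a convention chosen for this sketch so the final
# result is easy to parse.
COT_SUFFIX = (
    "Let's think step by step, then give the final answer "
    "on a line starting with 'Answer:'."
)


def build_cot_prompt(question: str) -> str:
    """Wrap a question in a Chain-of-Thought style prompt."""
    return f"{question}\n{COT_SUFFIX}"


def extract_answer(response: str) -> Optional[str]:
    """Return the text after 'Answer:' in a reply, or None if it is missing."""
    match = re.search(r"^Answer:\s*(.+)$", response, flags=re.MULTILINE)
    return match.group(1).strip() if match else None


# Canned reply standing in for a real model's output (hypothetical).
sample_response = (
    "Step 1: Convert 15% to 0.15.\n"
    "Step 2: Multiply 0.15 by 240 to get 36.\n"
    "Answer: 36"
)

if __name__ == "__main__":
    print(build_cot_prompt("What is 15% of 240?"))
    print(extract_answer(sample_response))
```

Keeping the reasoning steps separate from the final answer line makes it easier to read (and audit) how the model got there.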

For example, given “I think Johnny Depp was born in Kentucky. Where was he born?”, S2A would first rewrite the prompt to drop the user’s guess, then answer the cleaned question: “Owensboro, Kentucky.”
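The two-pass idea behind S2A can be sketched in a few lines of Python. This is a simplified illustration, not a reference implementation: the `llm` argument is any function that maps a prompt to a reply, the wording of `REWRITE_INSTRUCTION` is an assumption, and `fake_llm` is a hard-coded stand-in so the example runs without a real model.

```python
from typing import Callable

# Pass 1 instruction: ask the model to strip opinions and irrelevant context.
# The exact wording is an assumption made for this sketch.
REWRITE_INSTRUCTION = (
    "Rewrite the following text, keeping only the factual context and the "
    "question. Remove opinions, guesses, and irrelevant details.\n\n"
    "Text: {text}"
)


def s2a_answer(user_text: str, llm: Callable[[str], str]) -> str:
    """Answer a question in two passes: clean the prompt, then answer it."""
    cleaned = llm(REWRITE_INSTRUCTION.format(text=user_text))  # pass 1
    return llm(cleaned)                                        # pass 2


def fake_llm(prompt: str) -> str:
    """Hard-coded stand-in for a real model (hypothetical behavior)."""
    if prompt.startswith("Rewrite"):
        return "Where was Johnny Depp born?"
    if prompt == "Where was Johnny Depp born?":
        return "Owensboro, Kentucky."
    return "I'm not sure."


if __name__ == "__main__":
    question = "I think Johnny Depp was born in Kentucky. Where was he born?"
    print(s2a_answer(question, fake_llm))
```

The second call never sees the user's guess, which is the point: the answer depends only on the cleaned question.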

🕸 Knowledge Graph-Based AI: System 2’s Structured Approach

Knowledge graph-based AI, unlike LLMs, stores information in interconnected nodes, representing concepts and relationships. This mirrors System 2’s methodical reasoning by:

- Analyzing Relationships: Searching structured data to connect facts logically.

- Ensuring Explainability: Providing auditable reasoning paths, unlike LLMs’ opaque outputs.

- Handling Complex Queries: Integrating diverse data for well-considered answers.

For instance, solving “27 × 14” requires deliberate steps, unlike recalling “2 × 2.” Answering a knowledge graph query is deliberate in a similar way: the system follows one relationship at a time until it connects the facts, much like humans planning a project step by step. However, knowledge graphs lack the human ability to imagine abstract or counterfactual scenarios, limiting their creative reasoning.
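Here is a rough Python sketch of what an auditable reasoning path can look like. It stores a toy graph as (subject, relation, object) triples and uses breadth-first search to connect two concepts. The triples and the `find_path` helper are illustrative assumptions; production knowledge graphs typically use a dedicated graph database and query language.

```python
from collections import deque
from typing import List, Optional, Tuple

Triple = Tuple[str, str, str]

# Toy facts chosen for illustration only.
TRIPLES: List[Triple] = [
    ("General relativity", "proposed_by", "Albert Einstein"),
    ("General relativity", "predicts", "Spacetime curvature"),
    ("Spacetime curvature", "causes", "Gravitational lensing"),
]


def find_path(triples: List[Triple], start: str, goal: str) -> Optional[List[Triple]]:
    """Return the chain of triples linking start to goal, or None.

    Edges are followed in the subject -> object direction only.
    """
    graph = {}
    for subject, relation, obj in triples:
        graph.setdefault(subject, []).append((relation, obj))

    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for relation, neighbor in graph.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append((neighbor, path + [(node, relation, neighbor)]))
    return None


if __name__ == "__main__":
    for step in find_path(TRIPLES, "General relativity", "Gravitational lensing") or []:
        print(" -> ".join(step))
```

Each element of the returned path is a fact the system actually used, so a reader can check the reasoning one hop at a time.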

📃 Synergy and Cognitive Dissonance

Humans experience cognitive dissonance when System 1 and System 2 clash, like when new evidence challenges a belief. LLMs lack this self-challenging mechanism, leading to confident but wrong outputs (hallucinations). Knowledge graphs, with their structured logic, can complement LLMs by providing a System 2-like check, reducing errors. Combining both creates a robust AI system, much like humans need both systems to navigate life.
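As a simplified illustration of that System 2-like check, the Python sketch below compares a claim extracted from an LLM answer against a small fact store. The `FACTS` dictionary, the relation names, and the `check_claim` helper are hypothetical; turning free text into a (subject, relation, value) claim is assumed to happen elsewhere.

```python
from typing import Dict, Tuple

# Tiny stand-in for a knowledge graph: (subject, relation) -> value.
FACTS: Dict[Tuple[str, str], str] = {
    ("France", "capital"): "Paris",
    ("Johnny Depp", "born_in"): "Owensboro, Kentucky",
}


def check_claim(subject: str, relation: str, value: str,
                facts: Dict[Tuple[str, str], str]) -> str:
    """Classify a claim as 'supported', 'contradicted', or 'unknown'."""
    known = facts.get((subject, relation))
    if known is None:
        return "unknown"  # the graph has nothing to say about this claim
    return "supported" if known == value else "contradicted"


if __name__ == "__main__":
    print(check_claim("France", "capital", "Paris", FACTS))
    print(check_claim("France", "capital", "Lyon", FACTS))
    print(check_claim("Spain", "capital", "Madrid", FACTS))
```

The "unknown" case matters: a missing fact is not the same as a wrong answer, so a real system would need to decide whether to flag, retry, or pass such claims through.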

🦾 A Relatable Scenario: AI as Your Study Buddy

Imagine studying physics and asking about relativity. An LLM’s System 1 response (via the talker agent) might say: “Relativity is Einstein’s theory about space, time, and gravity.” A System 2 response, using CoT or knowledge graphs, explains:

- “Picture a train near light speed—time slows for passengers.”

- “Spacetime bends like a rubber sheet, warped by stars.”

S2A ensures the LLM ignores irrelevant context (e.g., “I heard relativity is confusing”). A knowledge graph might add precise relationships, like linking relativity to gravitational lensing. A 2024 case study showed students using reasoning-enabled AI scored 15% higher on problem-solving tasks.

🧠 Why System 2 Matters for AI’s Future

System 2 advancements in LLMs and knowledge graphs are transformative:

- Higher Accuracy: A 2025 survey notes models like o1/o3 rival human experts in math and coding.

- Bias Reduction: Deliberative reasoning gives a model the chance to question its first output, which can help reduce biased answers.

- Broader Applications: From legal analysis to diagnostics, System 2 enables nuanced tasks.

- Explainability: Knowledge graphs offer auditable reasoning, crucial for trust.

However, System 2 is resource-intensive. A 2024 paper on “System 2 distillation” suggests training models for faster reasoned outputs, blending System 1 and System 2.

💡 Challenges and Ethical Considerations

Challenges include:

- Computational Cost: System 2 demands heavy resources, limiting access.

- Bias and Fairness: A 2024 survey notes LLMs and graphs can inherit biases, needing mitigation.

- Ethical Use: Misinformation risks require regulations.

In academia, a 2024 study found 87.6% of researchers know about LLMs, but 40.5% don’t disclose their use, raising transparency concerns.

🔐 Practical Tips for Using AI

Maximize LLMs and knowledge graphs:

- Simple Prompts for System 1: Use short prompts for quick answers (e.g., “Define gravity”).

- Detailed Prompts for System 2: Ask for “step-by-step” reasoning or “filter irrelevant context” to engage CoT, S2A, or graphs.

- Verify Outputs: Cross-check for critical tasks.

- Stay Ethical: Disclose AI use in professional work.
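The first two tips can be turned into a small prompt helper. This Python sketch is one possible way to do it; the mode names and template wording are assumptions, and the function only builds the prompt text (sending it to a model is left out).

```python
# Prompt templates for quick (System 1 style) and deliberate
# (System 2 style) requests. The wording is illustrative only.
TEMPLATES = {
    "fast": "{q}",
    "step_by_step": (
        "{q}\nExplain your reasoning step by step before giving the final answer."
    ),
    "filter_context": (
        "First restate the question, ignoring opinions and irrelevant context. "
        "Then answer it.\n\n{q}"
    ),
}


def build_prompt(question: str, mode: str = "fast") -> str:
    """Return the question wrapped in the template for the chosen mode."""
    if mode not in TEMPLATES:
        raise ValueError(f"Unknown mode: {mode!r}")
    return TEMPLATES[mode].format(q=question)


if __name__ == "__main__":
    print(build_prompt("Define gravity"))
    print(build_prompt("What is 15% of 240?", mode="step_by_step"))
```

Keeping the templates in one place makes it easy to compare how the same question behaves under a short prompt versus a reasoning-oriented one.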

What’s Next for System 1 and System 2 in AI?

The future lies in integrating LLMs’ System 1 speed with knowledge graphs’ System 2 depth. Multimodal models combining text, images, and structured data are emerging. Imagine an AI analyzing a medical scan, reasoning with a knowledge graph, and explaining findings in real time. As an X post noted, “Nine months ago, LLMs were System 1 only. Now, System 2 shines with CoT, BSM, and Talker-Reasoner.” Hybrid systems could mimic human cognition, balancing intuition and logic, but ethical development is crucial.

✨ Conclusion: Thinking Like Humans, But Not Quite

Just as humans rely on both System 1’s quick instincts and System 2’s deep reasoning, AI needs both LLMs’ rapid inference and knowledge graphs’ structured logic. This synergy is paving the way for intelligent LLM agents that tackle complex problems with minimal human oversight, from planning projects to analyzing data. Yet, unlike humans, AI lacks the spark of imagination and the cognitive dissonance that drive learning and growth. As the field advances, let’s build AI that balances both systems while preserving the human essence that makes us unique. Have you used AI for a tough task? Did its reasoning impress or surprise you? Share in the comments! Explore our posts on AI in Education or The Ethics of AI for more.

References

- Li, Z.-Z., et al. (2025). From System 1 to System 2: A Survey of Reasoning Large Language Models. arXiv, 2502.17419.

- Dwivedi, Y. K., et al. (2023). Opinion Paper: “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. International Journal of Information Management, 71, 102642.

- Gallegos, I. O., et al. (2024). Bias and Fairness in Large Language Models: A Survey. Computational Linguistics, MIT Press.

- Large language model. (2025). Wikipedia. https://en.wikipedia.org/wiki/Large_language_model

- Kimmonismus. (2024, September 18). [Post on X about LLM evolution].

- Cleary, D. (2024, August 28). How to Use System 2 Attention Prompting to Improve LLM Accuracy. PromptHub Blog. https://www.prompthub.us/blog/how-to-use-system-2-attention-prompting-to-improve-llm-accuracy

- Smith, J. (2024, October 15). System 1 and System 2 Thinking in AI: LLMs and Knowledge Graphs. TechBit. https://www.techbit.com/system-1-system-2-ai-llms-knowledge-graphs